Today I had the opportunity to take part in the Technical vs Content panel on the first day of Sascon Manchester. We discussed what has the biggest impact on organic performance: the technical architecture of a website, or the content? It was a view on the market consensus on where you should be spending your time. Speakers included myself on behalf of iProspect Manchester; James English, BBC; and Edward Cowell, MediaCom.

The panel for this session comprised Radek Kowalski, Group Technical Search Manager at iProspect Manchester; Teddie Cowell, Director of SEO at MediaCom; and James English, Senior SEO Analyst for BBC Sport.

Everyone on the panel believes that the content vs technical debate is an interesting one. It’s something that is sometimes a bit of a contentious issue, but it’s one that always needs to be considered when planning an SEO campaign or organic strategy.

According to Radek, technical SEO has moved beyond the one-off tech audit to cover mobile, platform builds and commerce platforms. That shifts technical work from short-term projects to longer-term ones, and makes technical knowledge a requirement for an SEO.

So, how much do other people within agencies know or care about SEO?

At MediaCom, SEO is a core focus within other departments' agendas. There is a big desire within the industry to get content marketing to work harder, and SEOs in general have a really good understanding of how the web works. Even so, the majority of the money being spent on brands isn't being spent on SEO; it sits in brand budgets.

SEO considerations are increasingly becoming a part of brand marketing conversations from the very outset because SEOs are good at joining the pieces of a campaign together from the beginning. SEO helps to maximise value from budgets.

There has been a huge change in SEO over the last three years, says Radek, moving heavily towards content. In fact, people are becoming obsessed with content: how to do it better, how to get better ROI. iProspect Manchester has become very good at creating SEO content which supports massive campaigns. Technical optimisation can’t be forgotten. It’s almost like a vehicle for how content can be deployed. If the range of clients is small it can be very easy to technically deploy content, but if you’re trying to work on massive eCommerce systems for very large brands it can be difficult to deploy content. The technical element to content-based SEO is now more important because it has to work with your content.

You could have good content with good awareness, but it could be more successful if you have the technical element sorted.

Whatever you do for a project, you have to keep key stakeholders happy by educating them about how SEO can make things better, while still keeping technical standards high.

Educating clients about the value of SEO is one of the most important aspects of any SEO campaign, says Teddie.

SEO at the BBC

One of the most interesting things about doing SEO at the BBC is seeing that they compete in a different landscape. They have the brand authority, so the challenge is more about how people are able to find the content, along with the growing amount of competition the BBC has for news. They can't just rely on people searching for their content via brand searches. It's easy to become complacent, but it is a highly competitive landscape.

Some big technical SEO priorities for the BBC at the moment are sitemaps and newsfeeds as well as being able to react quickly to big news stories and high profile events such as the World Cup or Olympics.
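To make the sitemap point concrete, here is a minimal sketch of generating a basic XML sitemap from a list of pages. It is an illustration only and not how the BBC builds its sitemaps: the `build_sitemap` helper and the example.com URLs and dates are hypothetical, and the only fixed detail is the standard sitemaps.org namespace.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (as a string) for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages, for illustration only.
pages = [
    ("https://www.example.com/sport/football/match-report", "2015-06-11"),
    ("https://www.example.com/sport/olympics/preview", "2015-06-10"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Regenerating a file like this whenever a story is published is one simple way to help search engines discover new pages quickly, which matters most around big events.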

Educating clients

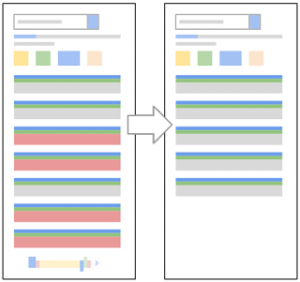

We can create a piece of content that is technically sound but it still needs to go on a client’s website and that is generally when errors and problems appear, says Radek. Changing wireframes can be expensive and daunting for clients. Similarly, UX does not always go alongside technical SEO priorities. Some elements that are great for UX may not work well with the UI and can break on different devices such as mobile or tablet.

Teddie believes it is an ongoing process because IT professionals sometimes have different ideas and different priorities to people working in and doing SEO.

What would you say works well for smaller websites? Should they focus on technical or content?

According to Radek, small sites with fewer than 500 landing pages should choose a good platform like WordPress, with free plugins which can do 80% of the technical work for you.

The smaller the site is, the more important technical excellence becomes. He has seen small sites with no backlinks and really well-optimised technical elements that are ranking really well. Sites need to comply with technical SEO standards and make sure themes pass site validations for mobile friendliness and page speed, amongst others.

Teddie agrees: there is still a role for technical SEO for smaller sites. They need to consider essential technical things, including consistency of URLs and of header elements like h1s.
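As a rough illustration of what a consistency check for URLs and h1s might look like, here is a small sketch using only the Python standard library. The rules it applies (lowercase paths, no trailing slash, one h1 per page) are assumed house conventions rather than anything the panel prescribed, and `count_h1` and `url_issues` are hypothetical helper names.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class H1Counter(HTMLParser):
    """Counts opening <h1> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names before calling this method.
        if tag == "h1":
            self.h1_count += 1


def count_h1(html):
    """Return the number of h1 elements opened in the given HTML."""
    parser = H1Counter()
    parser.feed(html)
    return parser.h1_count


def url_issues(url):
    """Return a list of deviations from the assumed URL conventions."""
    path = urlsplit(url).path
    issues = []
    if path != path.lower():
        issues.append("uppercase characters in path")
    if path != "/" and path.endswith("/"):
        issues.append("trailing slash")
    return issues


# Hypothetical page and URL, for illustration only.
print(count_h1("<h1>Shoes</h1><p>Intro</p><h1>Running shoes</h1>"))
print(url_issues("https://www.example.com/Shoes/Running/"))
```

Run across every landing page on a small site, a check like this flags pages with a missing or duplicated h1 and URLs that drift from the chosen pattern.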

When you see great content being produced, what do you see as the key technical elements that are often forgotten or missed?

With storytelling content built on parallax pages, which is a popular design trend, the technical issue for SEOs is that parallax pages lose performance for searchers and for some search queries.

James feels that it is important to experiment with different content formats to try to give the best experience for the user online. The aim is always to find the best experience for the user and match it with the site hierarchy.

Designers love Flash and JS, but try to stick to HTML5, because it's very flexible and easy for the search engines to digest. Sometimes different types of content make a page not mobile friendly, and you run the risk of making the page unconsumable on certain devices, says Radek.

70% of your audience may access your content using mobile (think about that). If you work in an industry that focuses on young adults they don’t really care about desktops, laptops or even tablets. They see the world via mobile phones and any campaign should be mobile friendly.

You have to remember that different clients have different issues, says Teddie. Not every brand was well prepared for the mobile update. What Google did by forcing the point of the mobile agenda was a really good thing to do. Brands needed a wake-up call and that's what the Google update was.

How much of a role does UX play for SEO?

SEOs need to define the two different elements: UI and UX. The user interface will help to make your site more accessible and more compliant. The BBC has focused for years on making its website accessible for different users. BBC creative should be used as a standard for accessibility in UI.

Google has been giving people massive amounts of technical information and guidance, which people should be listening to. Google will become more and more picky, and is giving messages like "be mobile friendly", "be clean" and "provide a good UX". This is because they care, but also because they want to save money: their infrastructure to crawl the web most likely costs billions.

What is content to you? What is a good piece of content, what are you talking to clients about?

At MediaCom content can be lots of things, says Teddie: anything that connects a brand to its consumers, or something that people choose to engage with, such as YouTube, a press partnership or the website itself. It's about how the users are moving around the content.

James, like Teddie, believes that content is anything that connects users to the brand. He tries not to use the word content much around journalists, because to them it is like a dirty marketing term. They call it other things, such as features, articles, interviews, video content or TV content.

The final question for the panel was a good one.

Where do you spend a limited budget that has to get results? Technical or content? Consider that the client is a medium-sized retail company.

James: Technical

Teddie: Depends on the nature of the business, but for medium-sized retail, technical.

Radek: Investing in technical can be immediately seen as having a positive impact.